DISCLAIMER: This is a rather long post on the topic of random numbers, so …uh, sorry for that.

I want to talk about a couple of interesting things related to randomness and its many nuisances especially when applied to games. But before we get to that I guess I’ll introduce the basic notions for those of you who are not familiar with this whole thing. You can skip the first two sections if you know what a PRNG is, and how it works.

What is Random

Random is commonly defined as “unpredictable“. In general, when we are unable to find a pattern that would allow us to anticipate the outcome or occurrence of an event, we call it “unpredictable” and there’s a chance we will consider the event “random”.

You’ll also hear of “true randomness”, and things like natural atmospheric noise, lightning bolts, or particles falling from the space being used as sources and examples of it. But while we can’t currently predict when and where lightning will hit, events in the universe like electric discharges in clouds are most likely just a massively complicated function of a number of different factors, and not really something that happens with no rhyme or reason. It’s quite possible that if we were able to simulate each particle and sub-particle inside a group of storm clouds and their relevant vicinity, lightning would be trivial to anticipate with accuracy. This makes its “unpredictability” debatable, I guess.

Having said that, let’s not forget that “predictable” and “unpredictable” are relative to an “observer”. What we consider true random events could totally have a logic behind, but what matters is that from our -and our system’s- point of view, they are impossible to predict, and if the machine (or person) is unable to anticipate the occurrence of an event, the definition applies, regardless of the event’s “actual” predictability.

On a smaller scale, we see that in games, where there’s a lot of things normally being controlled by random number generators, and while their behavior is unpredictable to the player, for the computer running the game, every simulated element and entity inside the game world is a totally predictable thing. The computer knows where everything will be in the next frames or what the outcome of the next “random” event will be. Interestingly enough, player input would be considered random from the computer’s point of view, even if the player is consistently performing a repetitive sequence of button presses.

Humans, by the way, are awful at “doing” random. We are probably the most predictable thing on Earth. If I ask you to think of a random number between 1 and 20, chances are that you’ll pick your favorite number, or one that means something to you (which I could predict with very little information), or 17! Because it’s always 17!.

PRNG it on!

But it’s OK, computers are awful at this too, it’s just that they are methodically awful at it.

The only way (only?) for a computer to come up with random numbers without external aid is through a PRNG (Pseudo-Random Number Generator); an algorithm that generates a “sequence” of seemingly random numbers starting from one called the “seed“. Depending on the algorithm this seed may be a matrix, a number, a vector, etc.

The PRNG function applies a math transformation to the seed, so it becomes (or can derive from it) a completely different (and seemingly random) number. The result is returned of course, but this also leaves the seed “transformed” and ready to be used by the PRNG again, when a new random number is requested.

This process gives you an endless sequence of apparently random numbers. The “quality” (uniformity and periodicity) of this sequence depends on the algorithm you are using. The key here is that the generated numbers will “look” random because there’s not an obvious correlation between one number and the next, but any time you feed the same seed to the same PRNG, you’ll get the exact same sequence of numbers. Every time, which in the great scheme of things is like the opposite of “unpredictable”.

This “feature” is actually useful for encryption, data compression, procedural generation, etc, etc but as I hinted before, what we see as random in this case is actually something completely controlled and predictable. You can tell ahead of time the next number if you know the PRNG and the seed!

Of course users of your software won’t (normally) know the seed nor the algorithm used, so they have little to no chance of predicting the behavior of your system (except when they hack into your game’s code and then devise methods to abuse and control your PRNG… like it has happened in a number of games already).

But enough talk, have at you!

There are many PRNG algorithms with different properties, but most of them will (theoretically) generate a “uniform” sequence of numbers over time, meaning that each possible number that they can generate will appear pretty much the same number of times in a sequence. Uniformity is an important property for a lot of applications, so let’s run some experiments!

C just as many other languages has a way of generating random numbers already implemented on its standard library (stdlib.h). To get a random number in C we call the rand() function, which returns a pseudo random number between 0 and RAND_MAX (stdlib constant). You can also set the seed for the PRNG with srand(). For the purposes of our experiment, we are interested in scaling down the range of numbers generated to any range we want. One way of doing this is with the following code:

int randomInt(int min, int max){

return min + rand()*(max-min+1)/(RAND_MAX + 1);

}

NOTE: Another popular way of doing this is with min + rand() % (max-min+1), which is considered a bad approach because skews the distribution to certain numbers that will be more likely to appear than others. Depending on your application this may not be a concern but it’s usually not a good idea to go that route.

It’s worth noting that scaling a PRNG range “up” will generate holes in your distribution if the PRNG can only return integer values. For example if the MAX_RAND of the generator you are using is 1000, and you want a to draw numbers between 1 and 4000, you’ll need to scale up the value returned by 4, which will cover the whole range you requested, but skipping a lot of numbers, as you’ll only get things like 0, 4, 8, … 200 … 4000, which correspond to the 1000 possible numbers that the PRNG can return, multiplied by the proper scaling constant.

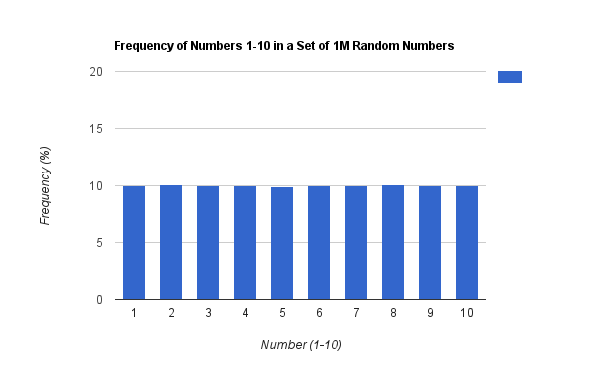

But back to our simpler problem; If I use the function above to generate 1 million random numbers between 1 and 10, recording their frequencies and later plotting them in a fancy graph, this is what I get:

As you can see, statistically speaking each number had a nearly perfect 10% (1 out of 10) chance of being drawn. Sounds nice right? Sure, but before we celebrate success we should check what the actual sequence of numbers looks like.

Reality Check #1: Small-scale uniformity is hard

Here you can see the first 100 numbers generated:

7 6 5 7 10 6 8 2 1 6 5 1 1 9 6 7 3 1 10 2 8 8 7 4 6 10 1 10 9 10 3 4 3 8 2 8 5 2 1 10 3 3 8 9 1 3 5 1 10 5 7 8 8 9 2 5 9 8 1 1 1 2 6 8 5 8 5 1 3 10 2 10 5 5 2 4 1 8 8 9 10 9 8 1 5 1 10 1 8 2 2 7 2 7 1 1 8 6 6 1

If you look closely, we got the first “4” after 23 other numbers! You can also see that there’s an unusually high amount of “1”s!

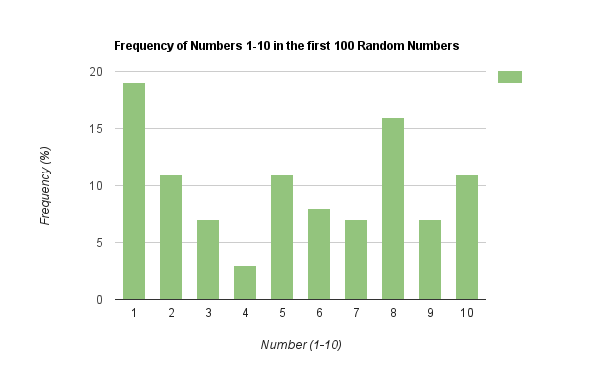

The frequencies for this particular set look like this:

… Where uniformity is nowhere to be found.

The problem with this is that often in games you rely on randomness to place things like enemies, or simulate dice throws or calculate damage and experience points. Things like a 4 appearing only three times in a set of 100 throws will look really bad and the player will probably notice. As you won’t normally draw a million numbers at once in a game, a sequence that is not “uniform” on short bursts is kinda terrible.

Reality Check #2: The standard PRNG in every language is probably just as bad

The PRNG is most-likely the culprit here, as uniformity is one of the things that the PRNG is in charge of. The method we used to scale down the random number to a range also affects the result, but we are using the approach that should give the best result (although it can never be “perfect”, because it’s impossible to distribute a fixed range of numbers -the output of your PRNG- over an arbitrarily sized domain in a perfectly even fashion).

As previously mentioned, most programming languages have a PRNG implemented as part of their standard libraries. Perhaps with some other language we’ll get better results right? RIGHT?!

Well here comes the bad news; It turns out that a vast majority of languages implement what is called a LCG (Linear Congruential Generator) as their core random generator, and of course they all suffer from the same problem. They all use the exact same model, just with different “values” for the constants, which affect mainly the periodicity of the function.

Here’s in fact a Microsoft’s Knowledge Base article that gives pseudo-code to implement VB’s internal PRNG. And the first thing they tell you is that it’s a standard LCG. That’s official documentation from Microsoft!

Translated to C, VB’s PRNG would be implemented as follows:

unsigned long seed = 0x50000L;

unsigned long rand2(){

seed = (seed * 1140671485L + 12820163L) % 16777216L;

return seed;

}

If you write that in your code editor of choice, you’ll get a completely functional PRNG in C that will behave exactly like VB’s Rnd() function given the same starting seed.

This follows the general formula for the LCG algorithm: X[n+1] = ( a*X[n] + c ) mod m

Now, the Wikipedia entry for LCG not only contains the description of the LCG model, but also the parameters used by a number of different programming languages (including VB) for their LCGs as well, and if you plug the values that appear for VB in the formula above, you’ll arrive to the code I just gave you. This means that you can change the values above and replicate the random function of a lot of different languages, if you so desire.

More importantly, this tell us that our chances of escaping the sucky-ness of rand() by using a different language are really slim. For a lot of uses an LCG is more than enough, though. It has its drawbacks but has the advantage of being one of the fastest methods out there to get (pseudo) random numbers and it’s very easy to implement and port across platforms.

Now, one important thing to notice is that the code above is already potentially superior to some implementations of LCG available in several (especially old) C compilers, as RAND_MAX (normally the “m” parameter of the model) varies among implementations and in some very ugly cases it might be no bigger than 32767, which presents a lot of problems starting with a really limited range of values. Other implementations introduce several “minor” modifications to the formula which can totally kill the PRNG, and that’s why you normally see so many people recommending against the default rand(). You never know what you are getting there.

Other Random Generators

Of course LCG is not the only method that exist to create pseudo randomness. It’s common for modern languages to include several options aside from a LCG in their base libraries, including some very robust algorithms.

A theoretically better (albeit more complex and a bit slower) alternative is the Merssene Twister algorithm. I tried a 32 bit implementation (MT19937) in C, and long story short: It exhibits similar behavior over small sets of numbers although with slightly better uniformity over a long sequence and an insanely superior periodicity, that makes it better choice for more serious stuff. The problem is that despite being more complex than LCG, none of its strengths shine in what we commonly need in games (few random numbers here and there), so it isn’t really a “better” choice for the simpler cases. Unless you need a huge amount of random numbers in a more uniform distribution (i.e: procedural generation) then I don’t think you have much to gain from switching to MT.

For what I normally do I’m pretty fine with an LCG, but if you feel like it’s too weak and broken to be used, I can recommend you to check the book “Numerical Recipes in C”, written by a bunch of brilliant people, which contains several alternatives including “Park and Miller’s method with Bays-Durham shuffle and added safeguards”, which despite the mouthful of a name is a pretty compact and relatively portable generator that should behave better than LCG, but without reaching the complexity of MT.

Reality Check #3: Building from the solution up is sometimes the answer

If your game needs random events that are “fair” in the short term, you can always change the approach. Instead of trying to magically get a fair list of numbers over a short amount of calls to your PRNG, start with a fair set and then select numbers randomly from it. The idea is to take numbers out of this pool using any PRNG of your choosing. When you take a number from the pool, this number should be removed so it won’t be drawn again until the whole set is exhausted.

Furthermore, if you use the “fastest” delete that can be performed on an array (swapping the selected element with the last element and then reducing the “array counter” by one) you also get the secondary benefit of restructuring the array after each call, leaving it completely “shuffled” by the moment you reach the end of it, which further improves randomness on later calls.

An implementation of this method that guarantees that each 20 calls, every number from 1 to 10 would have been drawn exactly 2 times would be as follows:

#define RND_POOL_SIZE 20

int random_set[RND_POOL_SIZE];

int rnd_arr_cnt = 0;

void rnd_init() {

int i;

srand (time(NULL));

/* Populate our pool iterating over the sequence of numbers 1..10 */

for (i=0;i<RND_POOL_SIZE;i++) random_set[i] = (i % 10) + 1;

}

int random_1_to_10(){

int ndx, elem;

/* If we have drawn all the elements from the pool, reset its size

counter. As we never really removed the elements, we will now have a

pool with all the same values we started with. If this is not the first

call, all the elements in the pool would also be "shuffled" because of

previous draws. */

if (rnd_arr_cnt < 1) rnd_arr_cnt = RND_POOL_SIZE;

/* Pick an index from the pool at random */

ndx = rand()*(rnd_arr_cnt)/(RAND_MAX+1.0);

/* Get the number stored at that position, and swap it with the last

element in the pool. By doing this, we bring the last element to our

current position, and after we reduce the size counter we would have

successfully "eliminated" the number we just drew from the pool */

elem = random_set[ndx];

random_set[ndx] = random_set[rnd_arr_cnt-1];

random_set[rnd_arr_cnt-1] = elem;

rnd_arr_cnt--;

return elem;

}

Of course this method guarantees perfect uniformity every RND_POOL_SIZE calls to random_1_to_10(), as long as RND_POOL_SIZE is a multiple of 10 (the number of different elements we are putting into the pool), which also means that’s it’s perfectly uniform every 2*RND_POOL_SIZE , 3*RND_POOL_SIZE, …. 1000*RND_POOL_SIZE calls, etc.

Let’s see an example of 100 numbers drawn using this method:

2 6 5 9 3 9 8 7 10 1 6 4 8 3 1 2 7 4 5 10 2 7 6 9 8 5 1 4 2 7 5 10 9 3 1 10 3 4 6 8 8 6 1 10 8 9 10 6 2 9 7 3 3 2 5 4 5 4 1 7 9 9 4 1 5 2 6 3 2 10 4 8 8 10 3 6 1 7 5 7 7 5 1 8 6 4 5 4 9 6 3 9 2 10 8 7 1 10 2 3

Since we set RND_POOL_SIZE to 20, each 2 lines of this set contains every number from 1 to 10 exactly twice, and as expected, in the whole set of 100 numbers presented here, each number appears exactly 10 times.

One advantage of this method is that it doesn’t require a sophisticated PRNG to achieve perfect uniformity over arbitrarily small sets. In the example above I’ve used the default rand() function of C, but I could have used my implementation of LCG, MT, or any method discussed here.

Bear in mind however that this technique may not be the best approach for your particular case, but In general if you are programming a game where the player’s enjoyment and their experience with the world heavily relies on random events (like random enemy encounters) it’s a good idea to implement something like this, where you can guarantee that each X events, there will be an equal number of good and bad outcomes.

While uniformity can keep things “fair”, you will find that it’s not always something good or desired. Sometimes it can hurt the expected experience and become a real problem in your game. In the next post I will talk about non-uniform distributions and how to implement the most common one using what we have covered so far and some clever algorithms.